Introduction

Prior to 2017, neural network translation relied almost entirely on sequential processing, reading sentences word by word. This approach made capturing long distance relationships both slow and imprecise.

Then came the game changer. In June 2017, Google researchers unveiled a preprint titled “Attention Is All You Need.” By December, its NeurIPS paper formalized the Transformer, a deep learning architecture that replaced recurrence with self attention.

Building on this self attention mechanism, the team used the open source Tensor2Tensor library and achieved notable improvements in English to German and English to French translation quality while also reducing training time.

In this article, I will explore the Transformer’s journey before and after its inception, examine how it has reshaped the technology landscape, and consider its ongoing impact on the future.

The World Before Transformers

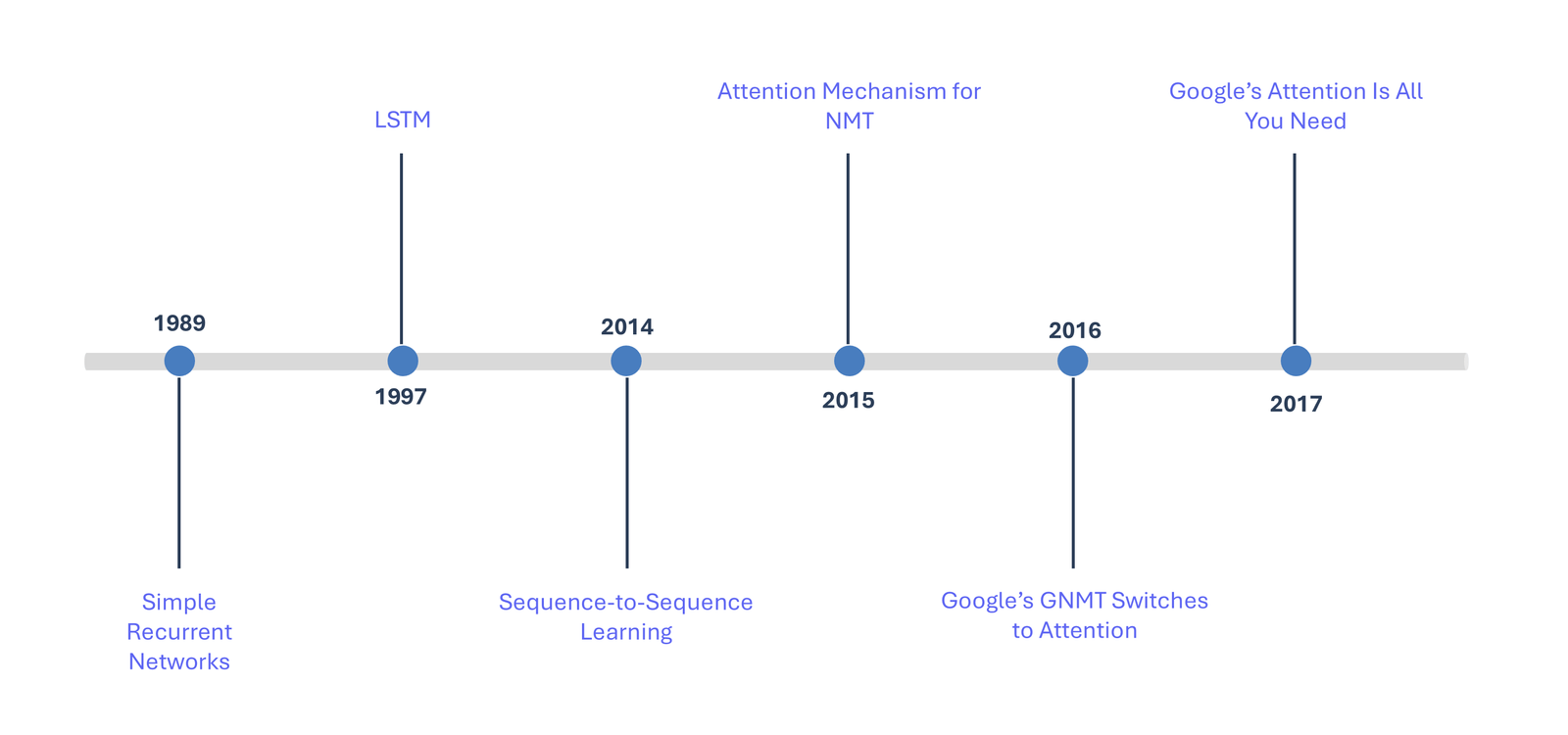

Before the advent of Transformers, recurrent neural networks (RNNs) and long short term memory (LSTM) models were the primary tools for natural language understanding and machine translation. An RNN processes data one step at a time. At each step, it feeds its output back into itself, allowing the network to remember prior context. LSTMs build upon this by introducing specialized gates designed to capture and retain long distance dependencies within sequences.
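To make the step by step nature concrete, here is a minimal sketch of a recurrent loop. It assumes a toy network with a single hidden unit and hand picked weights; the names `w_in`, `w_rec`, and `bias` are illustrative and not taken from any library. Real RNNs use weight matrices learned during training.

```python
import math

def rnn_forward(inputs, w_in, w_rec, bias):
    """Run a toy one-unit recurrent network over a sequence of numbers."""
    hidden = 0.0          # the "memory" carried from step to step
    states = []
    for x in inputs:      # strictly sequential: step t needs the result of step t-1
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states

# Illustrative values only: a single "1" followed by zeros.
states = rnn_forward([1.0, 0.0, 0.0], w_in=0.5, w_rec=0.8, bias=0.0)
print(states)  # the influence of the first input fades at each later step
```

Because each hidden state depends on the previous one, the loop cannot be spread across positions in parallel, and early inputs fade as the sequence grows.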

While these sequential models performed well on smaller tasks, they revealed significant limitations when handling lengthy passages of text or continuous speech. Translation quality often deteriorated mid sentence, subtle meanings were lost, and errors sometimes compounded unpredictably. Moreover, training and running RNNs or LSTMs at scale required substantial computational resources but yielded only incremental accuracy improvements.

Figure 01: World Before Transformers

For enterprise teams managing real world applications, whether multilingual customer support, conversational chatbots, or large scale content generation, the trade off between cost and consistency quickly became a bottleneck. This challenge paved the way for a fresh approach that could overcome the constraints of sequential processing.

The Birth of Transformers

Google Brain, a specialized deep learning team within Google Research, published the pivotal paper “Attention Is All You Need” (Vaswani et al., 2017), authored by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin.

The paper proposed a straightforward yet revolutionary architecture called the Transformer, which relies exclusively on the attention mechanism and replaces recurrence, convolution, and gating mechanisms. It also introduced positional encodings to help the model understand word order, as well as multiple attention heads to capture diverse relationships simultaneously.

Imagine a translator that can scan an entire sentence in a single glance, instantly assessing every word and its context before choosing the best translation. That is essentially what the Transformer does: it looks around rather than stepping through word by word. This capability unlocked far richer translations and drastically reduced training times by processing tokens in parallel rather than sequentially.
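The positional encodings mentioned above can be sketched in a few lines. The original paper uses fixed sine and cosine waves of different frequencies. The function name `positional_encoding` and the small sizes below are my own choices for illustration.

```python
import math

def positional_encoding(num_positions, d_model):
    """Build a table of sinusoidal position vectors, one row per position."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

# Illustrative sizes: 4 positions, 8 dimensions per vector.
table = positional_encoding(4, 8)
```

Each row is added to the embedding of the token at that position, so the same word at two different positions ends up with two different input vectors.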

Understanding Transformer Architecture

To give you a heads up, I have simplified the technical details and underlying mathematics from the “Attention Is All You Need” paper with the intention of making it easier for you to digest the content. If needed, please revisit this section.

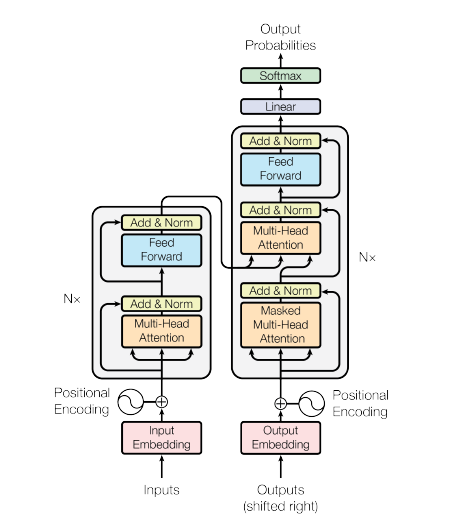

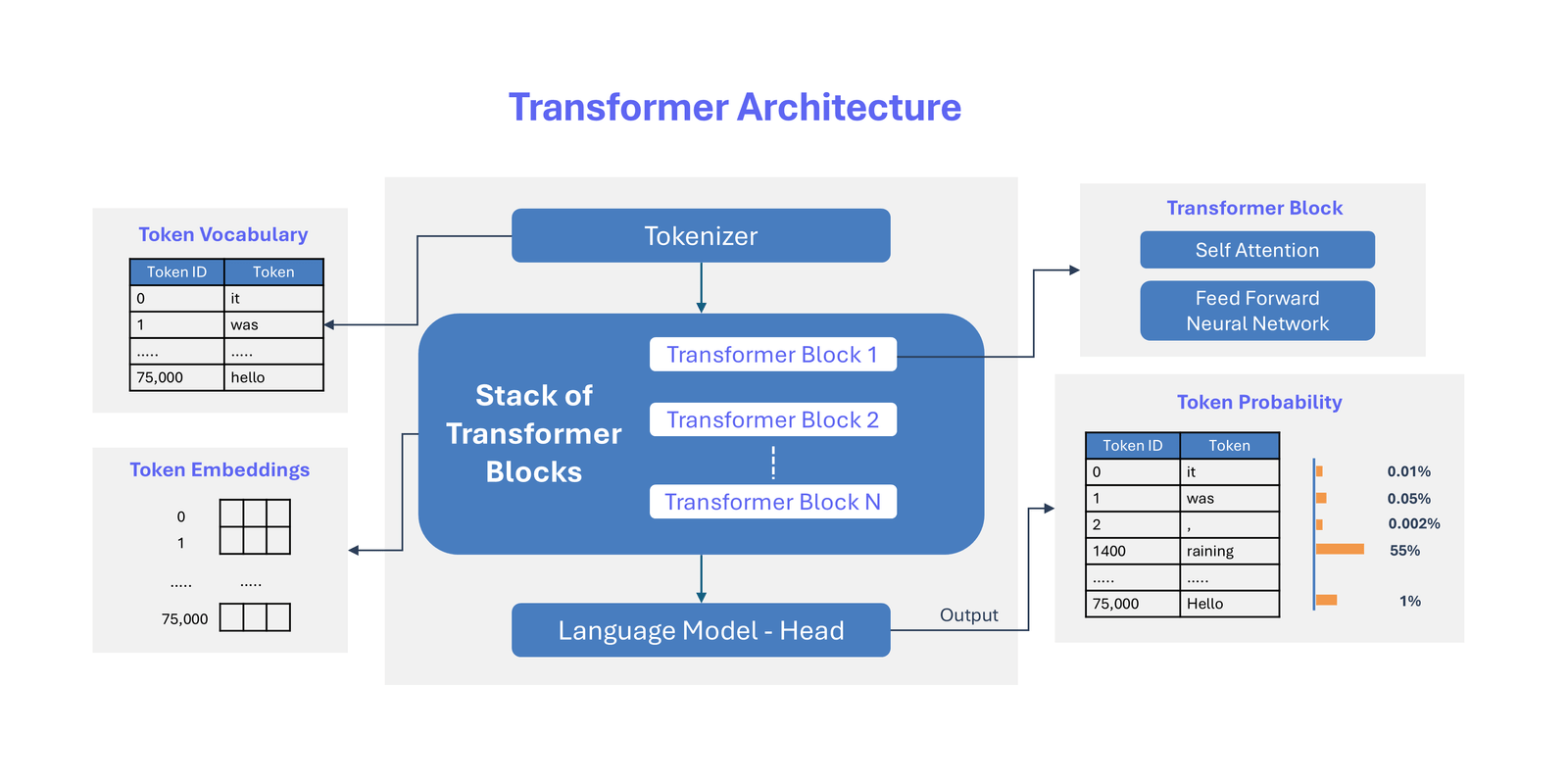

Architectural Overview

The following diagram illustrates the Transformer architecture as presented in the original paper. A simplified version of this architecture is used in the subsequent diagram.

Figure 02: Transformer Architecture as Presented in the Original Paper

The Transformer architecture consists of three major components:

- Tokenizer

- Transformer Blocks

- The Language Model (LM) Head

Tokenization is the process of breaking a sentence into smaller units known as tokens, where each token typically represents a word or sub word.
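As a rough illustration of sub word tokenization, the sketch below splits each word into the longest pieces found in a tiny vocabulary. Both the vocabulary and the greedy matching rule are assumptions made for this example; production tokenizers learn their vocabularies from large amounts of text and use more refined rules.

```python
def tokenize(text, vocab):
    """Split text into sub word tokens using greedy longest match (toy example)."""
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            end = len(word)
            # Shrink the candidate piece until it is in the vocabulary;
            # a single character is always accepted as a fallback.
            while end > start + 1 and word[start:end] not in vocab:
                end -= 1
            tokens.append(word[start:end])
            start = end
    return tokens

# Hypothetical vocabulary, chosen only for this example.
vocab = {"trans", "former", "s", "read", "text"}
tokens = tokenize("Transformers read text", vocab)
print(tokens)  # ['trans', 'former', 's', 'read', 'text']
```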

The majority of the computation and neural network operations occur within the Transformer blocks.

The output of the stacked Transformer blocks is passed to the Language Model head, where token scoring takes place.
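Token scoring in the LM head can be pictured as follows. This is a simplified sketch: the three word vocabulary, the weight values, and the function name `lm_head` are invented for illustration, whereas a real model scores tens of thousands of tokens with learned weights.

```python
import math

def lm_head(final_vector, output_weights, vocab):
    """Score every vocabulary token and convert the scores to probabilities."""
    # One dot product per vocabulary entry gives a raw score for each token.
    scores = [sum(w * x for w, x in zip(row, final_vector)) for row in output_weights]
    # Softmax turns the raw scores into probabilities that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return {token: e / total for token, e in zip(vocab, exps)}

# Hypothetical values: a 2-dimensional output vector and a 3-token vocabulary.
vocab = ["raining", "swimming", "sunny"]
output_weights = [[2.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
probs = lm_head([1.0, 0.5], output_weights, vocab)
best = max(probs, key=probs.get)
print(best)  # raining
```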

I have adapted Jay Alammar’s simplified architecture diagram. He presents it from bottom to top; I have inverted it so that the architectural flow is easier to follow from top to bottom. For a deeper understanding, I recommend visiting his blog, The Illustrated Transformer. Additionally, the short course “How Transformer LLMs Work” offered by DeepLearning.AI is highly valuable.

Transformer Block Components

The Transformer block itself is composed of two key components: Self Attention and the Feed Forward Neural Network (FFNN). Self Attention weighs and integrates tokens based on the input sequence, capturing context and relationships through relevance scoring and information aggregation. I cover the details of weighting and scoring briefly in a later section.

To understand how the Feed Forward Neural Network (FFNN) processes tokens, it’s important to distinguish between embeddings and representations. Embedding refers to the static vector of the input tokens before any processing, while representation is the evolved vector after passing through the transformer layers, capturing contextual information.

The FFNN is a fully connected neural network that processes each token’s representation independently. It is applied to each token separately and in parallel, allowing the model to learn complex patterns within the data.
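The sketch below shows what "independently" means here. It follows the two layer form with a ReLU in between that the original paper describes, but the weights and sizes are made up for illustration.

```python
def feed_forward(token_vector, w1, b1, w2, b2):
    """Apply a two layer network with ReLU to a single token's vector."""
    hidden = [
        max(0.0, sum(w * x for w, x in zip(row, token_vector)) + b)
        for row, b in zip(w1, b1)
    ]
    return [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(w2, b2)]

# Hypothetical weights: 2 inputs -> 3 hidden units -> 2 outputs.
w1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
b2 = [0.0, 0.0]

sequence = [[1.0, 2.0], [2.0, 1.0], [0.0, 0.0]]
# The same function is applied to every token; no token sees its neighbours.
outputs = [feed_forward(token, w1, b1, w2, b2) for token in sequence]
```

Since each call uses only one token's vector, the calls can run in any order or all at once, which is what makes this part of the block easy to parallelize.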

Additionally, the Transformer utilizes positional encoding to account for the order of words and multi head attention to capture different relationships between tokens.
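One way to picture multi head attention is to split each token's vector into slices and let each slice run its own attention. The sketch below does exactly that and nothing more. It is a deliberate simplification: in the original paper every head also has its own learned projections, and the joined result passes through one more learned layer, both of which are omitted here. All names and values are illustrative.

```python
import math

def softmax(xs):
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(vectors):
    """Self attention where each vector serves as query, key, and value."""
    dim = len(vectors[0])
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in vectors]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(dim)]
        )
    return outputs

def multi_head_attention(vectors, num_heads):
    """Run attention separately on each slice of the vectors, then rejoin them."""
    dim = len(vectors[0])
    if dim % num_heads != 0:
        raise ValueError("vector size must be divisible by num_heads")
    head_dim = dim // num_heads
    head_outputs = []
    for h in range(num_heads):
        chunk = [v[h * head_dim:(h + 1) * head_dim] for v in vectors]
        head_outputs.append(attend(chunk))
    merged = []
    for t in range(len(vectors)):
        row = []
        for head in head_outputs:
            row.extend(head[t])   # concatenate the heads for token t
        merged.append(row)
    return merged

# Hypothetical 4-dimensional token vectors, split across 2 heads.
tokens = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
merged = multi_head_attention(tokens, num_heads=2)
```

Because each head sees a different slice, each can settle on a different pattern of relationships between the same tokens.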

High Level Overview of Transformers

Let us consider an example to understand Transformers at an abstract level. Deciding on the most likely explanation for why “I arrived at the office wet” requires context. In the sentence “I arrived at the office wet because…”, the explanation depends on whether the sentence ends in “… it was raining outside” or “… I came by swimming.”

Here, the Transformer model needs to take only a small, constant number of steps, based on the patterns learned from its training data. In each step, it applies the self attention mechanism to model the relationships between all the words in the sentence, regardless of their positions, in parallel. The Transformer can then deduce that rain is the most likely explanation, and thus the final output would be: I arrived at the office wet because it was raining outside.

Encoding and Decoding in the Transformer Model

A neural network for sequence to sequence tasks such as machine translation typically consists of an encoder and a decoder. The encoder reads the input sentence and generates a representation of it. The decoder then generates the output sentence, word by word, while referring to the representation produced by the encoder.

In the Transformer model, the encoder begins by generating representations for each word. It then uses the self attention mechanism to aggregate information from all other words, producing a word specific representation informed by the entire context. This process is performed in parallel for all words, resulting in a new set of representations.

The decoder, in turn, generates one word at a time from left to right. At each step, it attends not only to the previously generated words but also to the final representations generated by the encoder.
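The "previously generated words only" rule is commonly enforced with a mask over the attention scores. The sketch below covers just that part of the decoder and leaves out the attention over the encoder output. The score values are invented, and the function name is my own.

```python
import math

def causal_attention_weights(scores):
    """Turn raw scores into weights, letting position i see only positions 0..i."""
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]               # current and earlier tokens only
        peak = max(visible)
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        hidden = [0.0] * (len(row) - i - 1)  # future tokens get zero weight
        weights.append([e / total for e in exps] + hidden)
    return weights

# Hypothetical raw scores for a 3-token output sequence.
scores = [
    [0.5, 2.0, 1.0],
    [1.0, 1.0, 3.0],
    [0.2, 0.4, 0.6],
]
weights = causal_attention_weights(scores)
```

The first token can only attend to itself, so its row is `[1.0, 0.0, 0.0]`, while the last token spreads its attention over all three positions.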

Attention Scoring and Weighting

For context, let us briefly understand the concept of weights. In a neural network, weights represent the strength of the connections between neurons. These weights are learned during the training process, and higher weights indicate stronger relationships between the connected elements. Attention weights play a similar role between words, except that they are computed afresh for each input sentence using parameters learned during training.

Learning these patterns and understanding these relationships is crucial for making predictions. In a weighted average, each data point is assigned a different weight, which reflects its relative importance in the final average.
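As a quick arithmetic illustration of a weighted average (the numbers are made up):

```python
def weighted_average(values, weights):
    """Average the values, letting each one count in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

values = [10.0, 20.0, 30.0]
weights = [0.5, 0.25, 0.25]   # the first value matters twice as much as the others

result = weighted_average(values, weights)
print(result)  # 17.5, pulled toward 10.0 compared with the plain mean of 20.0
```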

The Transformer compares each word to every other word in the sentence and assigns attention scores. These scores determine how much each word should contribute to the next representation. The higher the attention score, the greater the influence a word has on the representation.

Building on the example discussed above, the word raining would likely receive a high attention score. The attention scores are then used as weights to compute a weighted average of the representations of all the words, which is passed through a fully connected feed forward network to generate the new representation.
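The scoring and averaging steps can be sketched as follows. The comparison used here is the scaled dot product from the original paper. The two dimensional word vectors are hypothetical values I picked so that wet and raining point in a similar direction; in a real model the queries, keys, and values come from learned projections of each token's representation.

```python
import math

def softmax(xs):
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Return (attention weights, new representations) for every query."""
    dim = len(keys[0])
    all_weights, outputs = [], []
    for q in queries:
        # 1. Compare this word with every word: one score per pair.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        # 2. Normalize the scores so they can act as weights.
        weights = softmax(scores)
        # 3. Weighted average of the value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        all_weights.append(weights)
        outputs.append(mixed)
    return all_weights, outputs

# Hypothetical vectors for three words of the example sentence.
words = ["wet", "because", "raining"]
vectors = [[1.0, 0.2], [0.1, 0.1], [0.9, 0.4]]
weights, outputs = self_attention(vectors, vectors, vectors)
```

With these values, the row of weights for wet gives raining more influence than because, which mirrors the intuition in the example.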

Visualizing Attention in Transformers

An intriguing feature of the Transformer is that it allows us to visualize which parts of a sentence the network focuses on while processing or translating a specific word, providing insights into how information flows within the network. By examining the attention weights, we can gain a deeper understanding of the model’s decision making process.
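Even without a plotting library, attention weights can be inspected as a simple text chart. The weights below are placeholder values rather than output from a trained model, and `render_attention` is a helper name I made up for this sketch.

```python
def render_attention(words, weights, width=20):
    """Format each (word, attended word) pair as a line with a bar of '#' marks."""
    lines = []
    for word, row in zip(words, weights):
        for target, weight in zip(words, row):
            bar = "#" * round(weight * width)
            lines.append(f"{word:>8} -> {target:<8} {weight:.2f} {bar}")
    return lines

# Placeholder attention weights; each row sums to 1.
words = ["wet", "because", "raining"]
weights = [
    [0.5, 0.25, 0.25],
    [0.25, 0.5, 0.25],
    [0.25, 0.25, 0.5],
]
for line in render_attention(words, weights):
    print(line)
```

Longer bars mark the words that contribute most to a given word's new representation.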

If you would like to understand encoding and decoding through animation, and gain insights into how information flows through the network visually, I recommend reading Google’s blog post Transformer: A Novel Neural Network Architecture for Language Understanding.

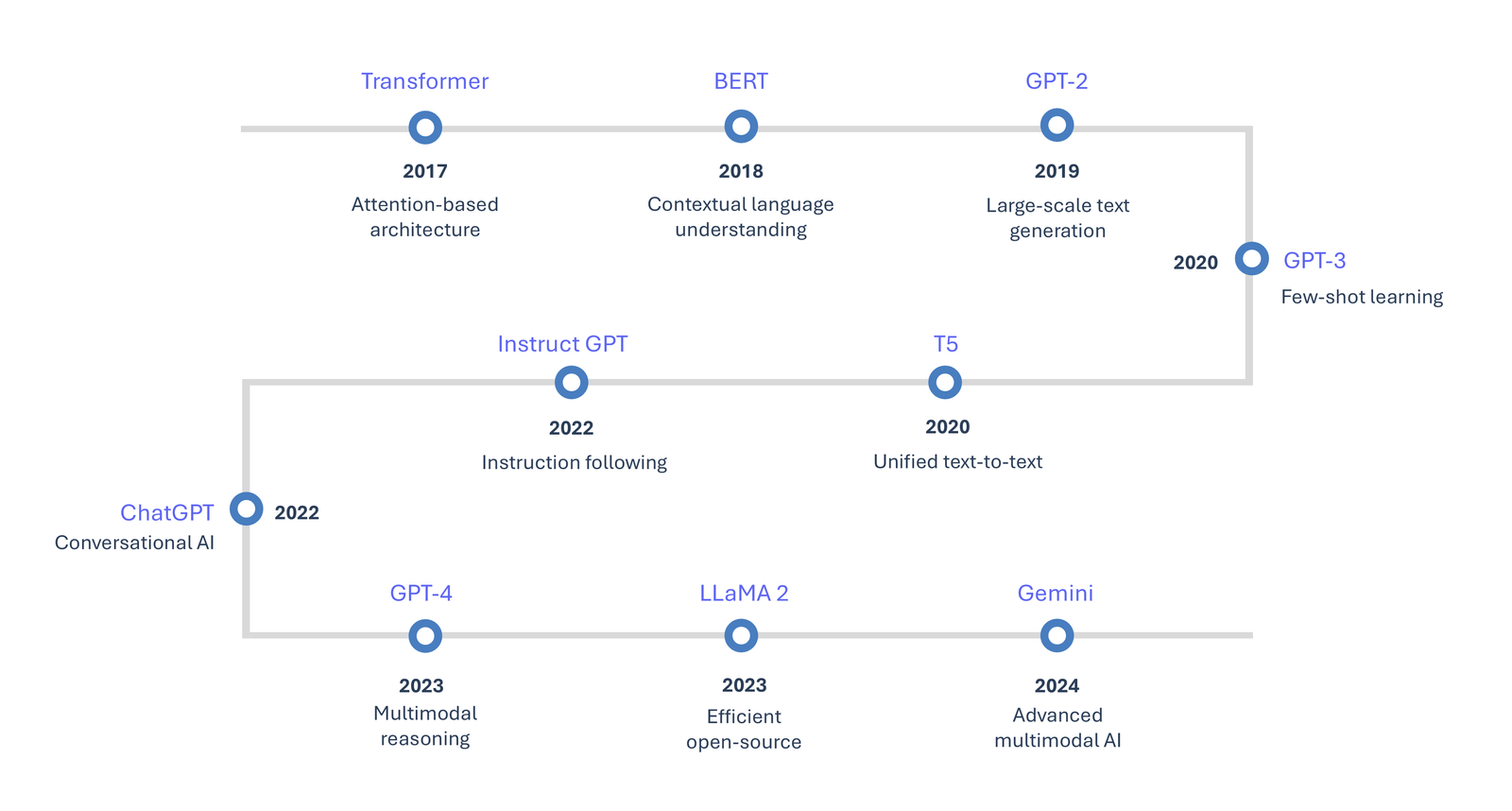

Evolution Beyond the Original Transformer

Figure 04: Evolution Beyond the Original Transformer

BERT (Bidirectional Encoder Representations from Transformers), developed by Google in 2018, repurposed the Transformer’s encoder to excel at tasks such as classification, named entity recognition, and question answering by leveraging deep contextual understanding.

T5 (Text to Text Transfer Transformer), also developed by Google Research, treats all natural language processing tasks as a text to text problem, excelling in areas like text generation, translation, and summarization. However, both BERT and T5 face limitations in human like comprehension, particularly in real world reasoning and long distance dependencies.

GPT (Generative Pre trained Transformer), developed by OpenAI, builds on the decoder of the Transformer. It excels in few shot learning but is limited by its unidirectional nature.

Popular models including BERT, GPT, Bard, LLaMA, and Gemini all trace their origins back to Google’s Transformer architecture.

Some models’ exact public availability can be gradual or region limited, but these dates reflect the main public launches or API access. Many recent AI models and applications, like Anthropic’s Claude and DeepSeek, are built on Transformer technology. They are not included in this timeline (Figure 04) because it shows only major foundational model releases.

Transformers in Software

At the enterprise level, the Transformer revolution has brought evident changes in several key areas, including search engines, recommendation systems, code generation, and knowledge bases.

In search engines, Transformer models such as BERT improve query understanding by capturing the context of words, resulting in more relevant and accurate search results. Google Search and Bing are prime examples of search engines leveraging these models to enhance their performance.

Recommendation systems, such as those used by Netflix and Amazon, employ Transformer models to provide personalized suggestions by identifying and interpreting user preferences and patterns in data, leading to better user engagement.

Figure 05: Transformers in Software

In code generation, Transformer based models such as CodeBERT and GitHub Copilot can understand and generate code, assisting developers with tasks such as code completion, bug detection, and even writing code from scratch. Similarly, in knowledge bases, Transformers like those powering Google Knowledge Graph and IBM Watson enable context aware responses, allowing for more efficient retrieval of relevant information and better understanding of user queries, thereby enhancing the overall user experience.

Business Impact of Transformers

Klarna, a leading FinTech company, has integrated OpenAI technology, which is built on Transformer architecture. This AI implementation has transformed Klarna’s customer service operations with impressive results:

- Handled 2.3 million conversations, covering two thirds of customer service chats

- Equivalent to the workload of approximately 700 full time agents

- Customer satisfaction scores comparable to those of human agents

- Repeat queries decreased by 25 percent

- Customers now complete requests in under 2 minutes, down from 11 minutes

- AI assistants deployed across 23 markets, operating 24/7

- Communication fluent in 35 languages

These advancements are estimated to drive a profit improvement of 40 million US dollars for Klarna in 2024.

The Amazon Search team reduced inference costs by 85 percent using AWS Inferentia, custom designed AWS chips optimized for deep learning and generative AI inference workloads. The team’s prediction system leverages Transformer based architectures, specifically models built on BERT.

Within the banking industry, Citizens Bank observed a 20 percent productivity boost among a group of engineers experimenting with generative AI. Deloitte predicts that effectively deploying AI tools across the software development lifecycle could enable banks to achieve savings of 20 to 40 percent in software investments by 2028.

Research on GitHub Copilot quantifies its impact on developer productivity and satisfaction. Developers using Copilot completed tasks 55 percent faster, with 74 percent reporting increased satisfaction and well being.

Thanks to Google’s Transformer revolution, these achievements across industries are now a reality.

Future Trends and Challenges

Looking forward, the Transformer revolution continues to evolve. Emerging trends include the development of more efficient architectures that reduce computational costs, enabling wider accessibility. Multimodal Transformers that combine text, images, and other data types promise richer, more intuitive AI interactions.

However, challenges remain: ethical considerations such as bias and transparency, the environmental impact of large model training, and the need for explainability to foster user trust. Furthermore, as Transformers are embedded deeper into critical systems, regulatory frameworks and governance will become increasingly important.

The transformation across industries will have a significant ripple effect on technology consulting firms and IT service providers. Just as the industry evolved from legacy systems to service oriented architectures and then microservices, alongside the rise of Agile and DevOps, AI integration will become a central focus.

Addressing these challenges while pushing the boundaries of capability will define the next chapter of Transformer driven AI.

Conclusion

Bringing it all together, the challenges of sequential processing, long distance dependencies, and reduced accuracy led to the birth of Google’s Transformer. This new architecture and its open source library eliminated the need for step by step processing, delivering significant improvements in both quality and computational efficiency. Breakthrough components within the Transformer blocks, such as self attention, relationship scoring, and parallel information processing, enabled this leap forward.

Since the introduction of Transformers, the field has witnessed increasingly powerful models that tackle complex tasks including understanding, classification, and generation. Industry leaders such as Google, Amazon, Netflix, Microsoft, and OpenAI have deeply integrated Transformer technology into their products and services, fundamentally reshaping the software landscape.

Looking ahead, enterprise adoption of Transformer based AI is set to expand across a wide range of sectors and organizational levels. A key focus for most organizations will be optimization at every stage, ensuring these advanced models deliver maximum efficiency and tangible business value.