Introduction

Financial institutions do not struggle to access market data. The real challenge is interpreting movements quickly, consistently and with context. Dashboards and feeds highlight price changes, yet they rarely clarify whether anything is meaningful or requires a response.

This article puts the agent-first architecture from my previous blog post into practice by implementing an autonomous Market Data Agent that reasons over live information and delivers decision-ready insight. The focus is practical: the objective is to show how an agent can behave predictably, scale responsibly and remain suitable for production environments.

The design is guided by one principle: AI should be optional rather than foundational. The agent must be useful without a model and become smarter only when AI is available. This mirrors how engineering teams introduce AI in regulated financial settings, where reliability, governance and cost control take priority.

The full implementation referenced in this article is available on GitHub as the Market Data Agent, for those who want to explore the design and code in more detail.

The Gap in Today’s Market Data Systems

Most market data platforms are strong at collection but weak at interpretation. They deliver streams of prices and charts but stop short of explaining whether a movement is relevant, unusual or strategically important. Teams are still expected to manually determine what matters and what does not.

At the other end of the spectrum, systems that depend heavily on AI often introduce operational fragility. They can be expensive to run, difficult to audit and harder to govern at scale. This creates an uncomfortable trade-off between reliability and intelligence.

The purpose of this project is to test a middle path. The question is straightforward: can we design an autonomous agent that performs reliably without AI and becomes more insightful when AI is available, without making AI a dependency? The goal is a small agent that fetches market data, identifies meaningful changes and explains them in clear language, while retaining the option to add deeper reasoning through an LLM when required.

If the design works, it becomes a repeatable blueprint for practical, production-ready agents in other domains.

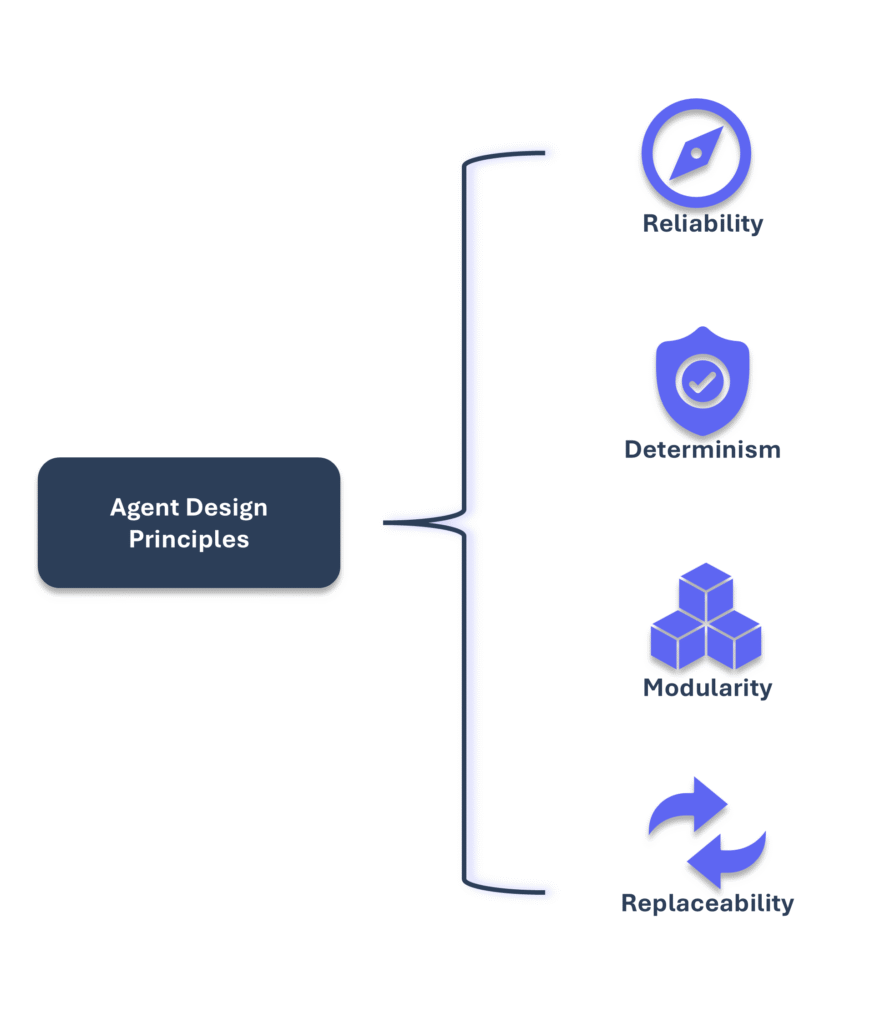

Agent Design Principles

The agent follows a simple rule. It must be useful before it becomes intelligent. The core behaviour focuses on a small set of deterministic tasks that do not rely on any model. It fetches market data, applies clear reasoning logic and produces a summary that can stand on its own. This keeps the system predictable, auditable and safe to run in environments where reliability and governance take priority.

At the same time, the design leaves room for intelligence to grow. Each capability is modular, so reasoning logic, anomaly rules and data sources can evolve without redesigning the foundation. The agent delivers value immediately while still being structured for deeper reasoning when the organisation is ready to enable AI.

Figure-01: Agent Design Principles

This balance is what makes agent-first design practical for real engineering teams. It gives teams a strong baseline today and a clear path toward more advanced behaviour in the future.

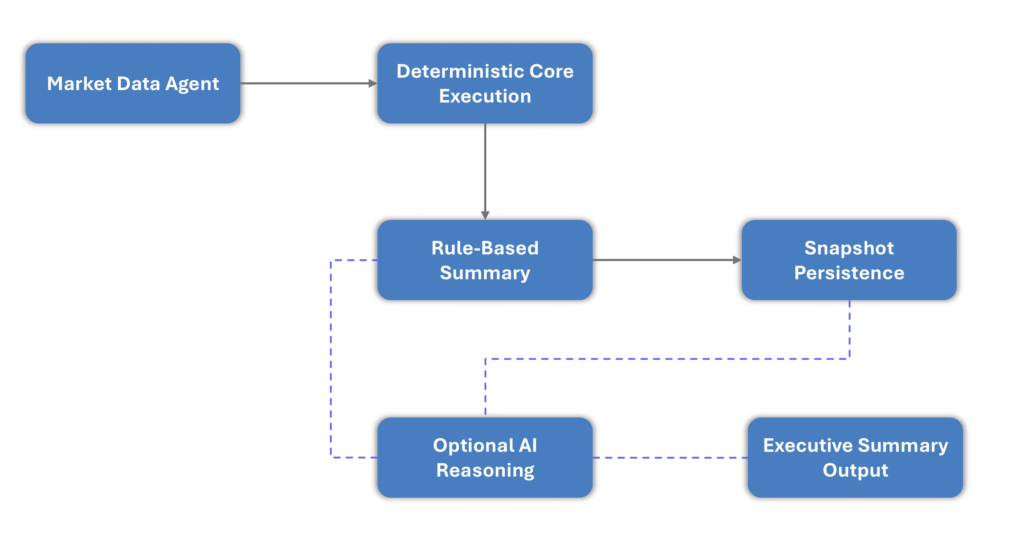

Architecture Overview

The architecture separates the agent’s operational workflow from the optional AI reasoning component. The core pipeline runs independently and does not rely on an LLM. This avoids coupling day-to-day execution to model availability. It also keeps the system usable in environments that restrict external AI services.

Figure-02: High-Level Architecture of the Market Data Agent

At a high level, the core loop performs four deterministic functions. It fetches live market data, normalises it into a common structure, detects significant price movements and produces a rule-based summary. This guarantees that the agent always completes its job regardless of whether AI is active.
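The four stages can be sketched as small, pure functions composed in a fixed order. This is a minimal illustration, not the repository's code: the `Quote` record, the stage function names, the 3% threshold and the summary wording are all assumptions, and `fetch` is injected so the loop runs without network access.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Quote:
    """Hypothetical common internal structure for any instrument."""
    symbol: str
    price: float
    pct_change: float  # percentage change over the comparison window


def normalise(raw: Iterable[dict]) -> list[Quote]:
    # Map raw records into the common Quote structure.
    return [Quote(r["symbol"], float(r["price"]), float(r["pct_change"])) for r in raw]


def detect(quotes: list[Quote], threshold_pct: float = 3.0) -> list[Quote]:
    # Keep moves whose absolute size meets the threshold, largest first.
    movers = [q for q in quotes if abs(q.pct_change) >= threshold_pct]
    return sorted(movers, key=lambda q: abs(q.pct_change), reverse=True)


def summarise(movers: list[Quote]) -> str:
    # Rule-based summary: no model is involved at any point.
    if not movers:
        return "No significant movements detected."
    parts = [
        f"{q.symbol} {'up' if q.pct_change > 0 else 'down'} {abs(q.pct_change):.1f}%"
        for q in movers
    ]
    return "Significant movements: " + "; ".join(parts) + "."


def run_core_loop(fetch: Callable[[], Iterable[dict]], threshold_pct: float = 3.0) -> str:
    # fetch -> normalise -> detect -> summarise, entirely deterministic.
    return summarise(detect(normalise(fetch()), threshold_pct))


if __name__ == "__main__":
    def sample_fetch() -> list[dict]:
        # Static sample data standing in for a live market data API.
        return [
            {"symbol": "BTC", "price": 64000.0, "pct_change": -4.4},
            {"symbol": "EUR/USD", "price": 1.08, "pct_change": 0.1},
            {"symbol": "ETH", "price": 3100.0, "pct_change": 5.0},
        ]

    print(run_core_loop(sample_fetch))
    # prints: Significant movements: ETH up 5.0%; BTC down 4.4%.
```

Because every stage is a plain function with no hidden state, the same input always yields the same summary, which is what makes the baseline auditable.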

The LLM sits outside the critical path. It receives the core summary only when deeper interpretation is required. This boundary gives teams full control over when AI is used and makes the entire system easier to govern. The core pipeline behaves identically in Standard Mode and AI-Augmented Mode, which keeps reliability, auditability and cost predictable.

“AI should enhance reliability, not replace deterministic systems.”

LLM as a Decoupled Reasoning Component

The Market Data Agent always completes its workflow without needing a model. It fetches data, detects movements and generates a clear rule-based summary. This deterministic output forms the trusted baseline that can run in any environment, including those where AI is restricted or unavailable.

The LLM is implemented as a separate reasoning module rather than being embedded inside the core loop. When activated, it takes the baseline summary and converts it into a leadership-focused briefing with clearer interpretation and context. When not activated, the core result is delivered as is. No part of the operational pipeline depends on AI.

This separation provides practical benefits. AI usage can be controlled through configuration rather than code changes. The model can be upgraded or replaced without affecting the rest of the agent. Most importantly, the organisation retains control over reliability, governance and cost while still gaining the option to apply deeper reasoning when it is needed.
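One way to express that boundary in code is a single function that always starts from the baseline and treats the model as an optional, fallible callable. The sketch below assumes the reasoning module is exposed as a plain `Callable[[str], str]`; the function name `finalise_summary`, the result keys and the logger name are hypothetical.

```python
import logging
from typing import Callable, Optional

logger = logging.getLogger("market_data_agent")  # hypothetical logger name

# An enhancer is any callable that turns the baseline summary into a briefing.
Enhancer = Callable[[str], str]


def finalise_summary(baseline: str, enhancer: Optional[Enhancer] = None) -> dict:
    """Return the deliverable; the baseline is always produced first and kept."""
    result = {"baseline": baseline, "briefing": baseline, "ai_used": False}
    if enhancer is None:
        return result  # AI disabled: deliver the core result as is
    try:
        briefing = enhancer(baseline)
    except Exception:  # model outage, timeout, quota exhaustion, etc.
        logger.warning("Reasoning module failed; delivering baseline summary")
        return result
    if not briefing or not briefing.strip():
        return result  # empty model output is treated as a failure
    result.update(briefing=briefing, ai_used=True)
    return result
```

Keeping the baseline in the result even when AI succeeds means reviewers can always compare the model's briefing with the deterministic output it was derived from.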

Implementation

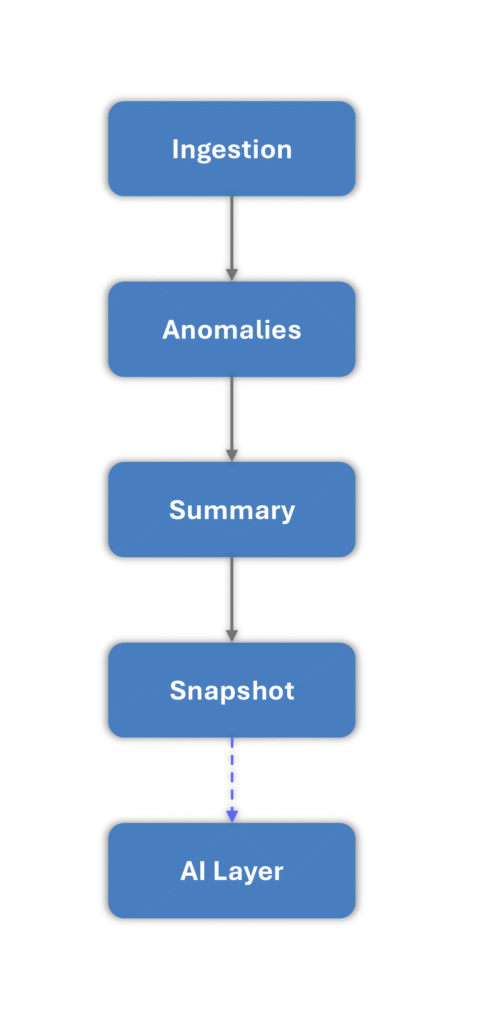

Core Phases of the Agent

The implementation follows a modular flow designed to be easy to extend and simple to audit. The agent starts by fetching live crypto and FX data and converting everything into a consistent internal structure. A lightweight anomaly detector then identifies the most meaningful movers, so the first layer of reasoning is transparent and predictable. A rule-based summariser converts those movements into a short, readable explanation that does not rely on any model.
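Crypto and FX sources rarely share a payload shape, which is why normalisation comes first. The sketch below assumes two hypothetical input shapes: a crypto record that already carries a 24-hour percentage change, and an FX quote given only as a current and previous rate. Both are mapped into one `Quote` type, and a simple ranking picks the largest movers regardless of direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    asset_class: str   # "crypto" or "fx"
    symbol: str
    price: float
    pct_change: float  # percentage change versus the reference price


def normalise_crypto(record: dict) -> Quote:
    # Hypothetical shape: {"id": "bitcoin", "usd": 64000.0, "usd_24h_change": -4.4}
    return Quote("crypto", record["id"].upper(), float(record["usd"]),
                 float(record["usd_24h_change"]))


def normalise_fx(pair: str, rate: float, previous_rate: float) -> Quote:
    # Assumed FX input gives rates rather than changes, so derive the change here.
    if previous_rate <= 0:
        raise ValueError(f"invalid previous rate for {pair}: {previous_rate}")
    pct = (rate - previous_rate) / previous_rate * 100.0
    return Quote("fx", pair, rate, pct)


def top_movers(quotes: list[Quote], n: int = 3) -> list[Quote]:
    # Rank by absolute move so large falls and large rises are treated alike.
    return sorted(quotes, key=lambda q: abs(q.pct_change), reverse=True)[:n]
```

Once every source produces the same `Quote`, the detector and summariser never need to know where a price came from, so adding a new feed only means adding a new normaliser.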

Each execution produces both a versioned JSON snapshot and a Markdown summary. This gives the agent a basic memory of its runs, supports comparison across time and makes debugging straightforward. Every stage is deliberately small and isolated so the workflow remains clear even as the system expands.
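A per-run artefact writer might look like the sketch below. It assumes that "versioned" means each run gets a timestamped file name plus an explicit `schema_version` field; the file naming, directory layout and helper names are hypothetical rather than taken from the repository.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def write_run_artefacts(out_dir: Path, movers: list[dict], summary: str,
                        schema_version: int = 1) -> tuple[Path, Path]:
    """Persist one run as a versioned JSON snapshot plus a Markdown summary."""
    out_dir.mkdir(parents=True, exist_ok=True)
    # Fixed-width UTC timestamp, so file names sort chronologically.
    run_id = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    snapshot = {
        "schema_version": schema_version,  # bump when the snapshot shape changes
        "run_id": run_id,
        "movers": movers,
        "summary": summary,
    }
    json_path = out_dir / f"snapshot_{run_id}.json"
    md_path = out_dir / f"summary_{run_id}.md"
    json_path.write_text(json.dumps(snapshot, indent=2, sort_keys=True), encoding="utf-8")
    lines = [f"# Market summary ({run_id})", "", summary, ""]
    lines += [f"- {m['symbol']}: {m['pct_change']:+.1f}%" for m in movers]
    md_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return json_path, md_path


def load_previous_snapshot(out_dir: Path) -> Optional[dict]:
    # The lexicographically last snapshot is the most recent run.
    files = sorted(out_dir.glob("snapshot_*.json"))
    return json.loads(files[-1].read_text(encoding="utf-8")) if files else None
```

Loading the previous snapshot is what gives the agent its basic memory: the next run can compare its movers against the last recorded ones.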

The outcome is a deterministic pipeline that always delivers the same type of result regardless of whether AI is enabled. It reflects how engineering teams introduce agents into existing platforms: by starting with a minimal, reliable and well-scoped capability that can scale over time.

Standard Mode vs AI-Augmented Mode

The agent can operate in two configurations. Both use the same core pipeline. The only difference is whether the LLM reasoning module is switched on.

In Standard Mode, the agent runs entirely on deterministic logic. It detects significant movements and produces a concise rule-based summary. This is the default mode and is suitable for environments where AI is restricted, unnecessary or deliberately disabled to control cost.

In AI-Augmented Mode, the LLM takes the core summary and rewrites it into a leadership-friendly briefing with clearer interpretation and context. The LLM never replaces the base logic; it only enhances it, and only when that adds value.

The active mode is controlled through configuration or policy rather than code changes. This allows organisations to enable AI only when it adds value, manage cost and governance deliberately, and preserve consistent operational behaviour. In both modes, the agent remains autonomous and reliable.
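Mode selection can be kept to a small, fail-safe function. In this sketch the `AGENT_MODE` key and the `ai_allowed_by_policy` flag are hypothetical names: configuration requests a mode, policy can veto AI, and any unrecognised value falls back to Standard Mode rather than enabling the model.

```python
import os
from enum import Enum
from typing import Mapping


class Mode(Enum):
    STANDARD = "standard"
    AI_AUGMENTED = "ai_augmented"


def resolve_mode(config: Mapping[str, str], ai_allowed_by_policy: bool = True) -> Mode:
    """Pick the run mode from configuration; anything unexpected means Standard Mode."""
    raw = config.get("AGENT_MODE", Mode.STANDARD.value).strip().lower()
    try:
        requested = Mode(raw)
    except ValueError:
        return Mode.STANDARD  # unknown value: fail safe rather than fail open
    if requested is Mode.AI_AUGMENTED and not ai_allowed_by_policy:
        return Mode.STANDARD  # policy can veto AI regardless of configuration
    return requested


if __name__ == "__main__":
    # For example: AGENT_MODE=ai_augmented python agent.py
    print(resolve_mode(os.environ))
```

Defaulting to Standard Mode on missing or malformed configuration means a deployment mistake can only switch AI off, never on.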

Why This Design Works in the Real World

This design reflects how organisations adopt AI in production. The agent delivers consistent value without AI and introduces deeper reasoning only when it provides measurable benefit. Reliability is never traded for intelligence.

The deterministic core gives teams a stable baseline. It is predictable, auditable and cost efficient. AI becomes an optional enhancement rather than an operational dependency. This makes it easier to satisfy controls in financial environments where availability, governance and cost monitoring are non-negotiable.

“Deterministic logic is the foundation of trustworthy agents”

Key advantages in real settings:

- The baseline behaviour is predictable because the core logic is deterministic

- AI can be enabled or disabled through configuration rather than code changes

- Cost is easier to manage because AI runs only when needed

- The reasoning module can be upgraded independently of the core pipeline

- Teams can scale from rule-based output to AI-enhanced insight at their own pace

The result is a blueprint that aligns with real production constraints. It allows teams to gain value immediately while giving them a controlled path to expand AI capability over time.

Engineering Insights

A key learning from this build is that simplicity is not a limitation. It is a strategic enabler. By expressing the agent as a collection of small, independent modules, the system becomes easier to extend without losing clarity or reliability. This creates a clean path for additional capabilities without redesigning what already works.

Another important takeaway is the value of replaceability. AI models, providers and pricing will continue to change. Designing the reasoning component so it can be swapped without affecting the agent protects against vendor lock-in and keeps the architecture useful as the landscape evolves.
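Replaceability can be made concrete with a structural interface that every reasoning provider satisfies. The sketch below uses `typing.Protocol`; `TemplateProvider` and `VendorAProvider` are hypothetical stand-ins, and the vendor client is injected as a plain callable so no real SDK or API is assumed.

```python
from typing import Callable, Protocol


class ReasoningProvider(Protocol):
    """Anything that can turn a baseline summary into a briefing."""
    name: str

    def enhance(self, summary: str) -> str: ...


class TemplateProvider:
    # Offline stand-in with no external calls; useful for tests and restricted sites.
    name = "template"

    def enhance(self, summary: str) -> str:
        return f"Leadership briefing: {summary}"


class VendorAProvider:
    # Hypothetical hosted-model adapter; the client is injected, not imported.
    name = "vendor_a"

    def __init__(self, client: Callable[[str], str]) -> None:
        self._client = client  # keeps the vendor SDK outside the agent itself

    def enhance(self, summary: str) -> str:
        return self._client(f"Rewrite for executives: {summary}")


def select_provider(name: str, registry: dict[str, ReasoningProvider]) -> ReasoningProvider:
    # Swapping providers is a configuration change: only `name` differs.
    try:
        return registry[name]
    except KeyError:
        raise ValueError(f"unknown reasoning provider: {name!r}") from None
```

The agent only ever depends on `ReasoningProvider`, so moving to a different model or vendor means registering a new adapter rather than touching the core pipeline.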

This approach also reflects how AI is deployed inside regulated industries. Organisations welcome deeper reasoning, but only when it does not compromise control, auditability or operational stability. Architectures that combine autonomy with optional AI adoption are more suitable for trading, risk and financial services, where reliability and governance are non-negotiable.

Next Steps: Multi-Agent Path

“Start small, scale capability, maintain control”

This implementation focuses on a single agent, but the design opens a natural path toward a multi-agent system. Because each capability sits behind a clear boundary, it becomes straightforward to introduce new agents that specialise in different areas without disturbing what already works.

For example, one agent could continue monitoring crypto movements, another could focus on FX volatility and a third could analyse equities or sector specific indicators. Each agent would have a focused purpose and a clear output, rather than one large system trying to interpret everything.

Growth does not mean more complexity. It means adding capability in small, targeted increments that align with organisational priorities. Agents can run independently or exchange conclusions when useful, without becoming tightly coupled. This leads to an intelligence layer that scales gradually while preserving the same reliability and clarity that made the first agent valuable from day one.
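Loose coupling between specialist agents can be illustrated with a very small coordinator. In this sketch, `SpecialistAgent`, `Conclusion` and `collect` are hypothetical names: each agent answers one narrow question, agents never call each other, and a failure in one agent is recorded as an unavailable conclusion instead of stopping the rest.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Conclusion:
    agent: str
    headline: str
    ok: bool = True


@dataclass
class SpecialistAgent:
    # Each agent owns one narrow question, e.g. crypto movers or FX volatility.
    name: str
    analyse: Callable[[], str]

    def run(self) -> Conclusion:
        try:
            return Conclusion(self.name, self.analyse())
        except Exception as exc:  # one agent failing must not stop the others
            return Conclusion(self.name, f"unavailable ({exc.__class__.__name__})", ok=False)


def collect(agents: list[SpecialistAgent]) -> list[Conclusion]:
    # Agents only publish conclusions; the coordinator never wires them together.
    return [agent.run() for agent in agents]
```

Because agents exchange only finished conclusions, a new equities agent can be appended to the list without changing the crypto or FX agents.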

Conclusion

This work demonstrates that an autonomous agent can be both reliable and intelligent without compromising operational stability. A deterministic reasoning loop delivers value from the first run, and an optional AI layer adds interpretation only when it improves the outcome. This balance makes the design suitable for production environments where predictability, governance and cost control matter as much as insight.

By translating agent-first ideas into a working implementation, we now have a practical pattern for introducing AI in a controlled and incremental way. The goal is not to maximise intelligence for its own sake. The goal is to integrate intelligence where it brings measurable advantage, while keeping the underlying system transparent and dependable.

This is the direction in which real AI platforms are evolving. It also forms a strong foundation for a gradual transition toward multi-agent ecosystems that grow through small, disciplined and focused steps.